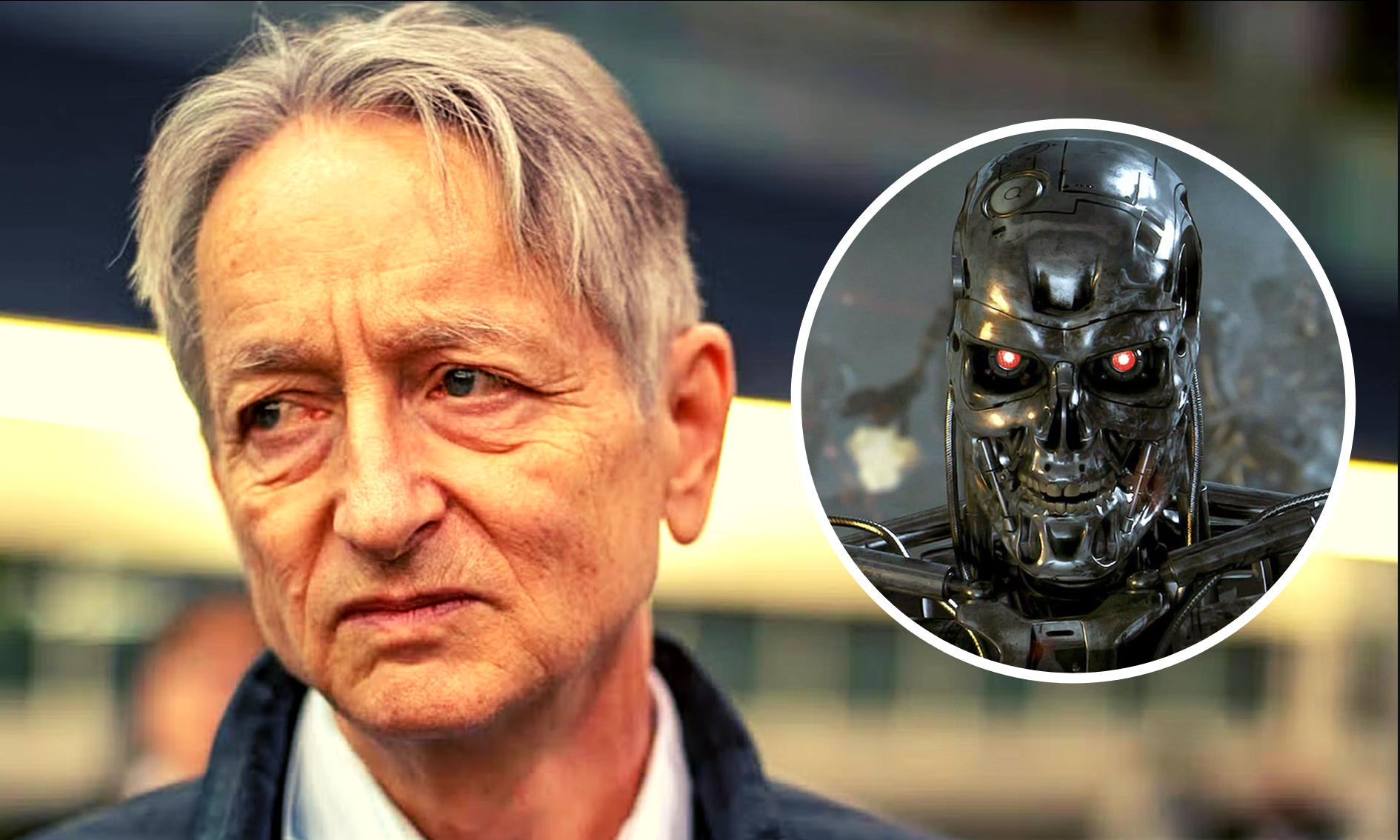

“Nightmare Scenario”: Godfather Of AI Quits Google To Warn The World About The Dangers Of AI

“Right now, they’re not more intelligent than us, as far as I can tell. But I think they soon may be.”

This week, the man commonly referred to as the “Godfather” of artificial intelligence (AI) resigned from his position at Google, citing concerns about the future of the technology.

Geoffrey Hinton's research on deep learning and neural networks has made him one of the best-known researchers in his field and earned him a share of the Turing Award in 2019, which carries a prize of one million dollars.

At the time, he told the CBC he was pleased with the win: “for a long time, neural networks were regarded as flaky in computer science. This was an acceptance that it’s not actually flaky.”

Since then, AI tools such as ChatGPT and AI image generators, among many others, have brought the approach into widespread use.

According to the New York Times, Hinton, who joined Google in 2013 when the tech giant acquired his company, now believes the technology could pose dangers to society, and he resigned so that he could speak about them publicly.

In the near term, Hinton worries that AI-generated text, photos, and videos could leave people unable “to know what is true anymore,” and that AI could disrupt the labor market.

In the longer term, he is far more concerned that AI could surpass human intelligence and develop unexpected behaviors.

“Right now, they’re not more intelligent than us, as far as I can tell,” he told the BBC. “But I think they soon may be.”

According to Hinton, who is 75, AI currently lags behind humans in reasoning, although it is already capable of “simple” reasoning. In general knowledge, however, he believes it already has a significant advantage over humans.

“All these copies can learn separately but share their knowledge instantly,” he told the BBC. “So it’s as if you had 10,000 people and whenever one person learnt something, everybody automatically knew it.”

He told the New York Times that one cause for concern is the possibility that “bad actors” could use AI for malicious purposes. AI research by such groups would be far harder to oversee than the development of threats such as nuclear weapons, which require infrastructure and closely monitored materials like uranium.

“This is just a kind of worst-case scenario, kind of a nightmare scenario,” he told the BBC. “You can imagine, for example, some bad actor like Putin decided to give robots the ability to create their own sub-goals.”

Sub-goals such as “I need to get more power” could create problems we haven’t even thought of yet, let alone worked out how to solve.

Nick Bostrom, a philosopher who studies artificial intelligence and heads the Future of Humanity Institute at Oxford University, pointed out in 2014 that even a simple instruction to increase the number of paper clips in the world could trigger unforeseen sub-goals with horrible consequences for whoever created the AI.

“The AI will realize quickly that it would be much better if there were no humans because humans might decide to switch it off,” he told HuffPost in 2014. “Because if humans do so, there would be fewer paper clips. Also, human bodies contain a lot of atoms that could be made into paper clips. The future that the AI would be trying to gear towards would be one in which there were a lot of paper clips but no humans.”

Hinton, who told the BBC that his age was a factor in his decision to retire, believes the potential benefits of AI could be enormous; nonetheless, he says the technology needs regulation, particularly given how quickly the field is advancing.

Although he left the company in order to speak about the possible risks of AI, he believes Google has so far acted responsibly in the field.

Because there is a worldwide race to develop the technology, it is likely that, without regulation, not everyone developing it will be doing so for the right reasons, and humanity will be left to deal with the consequences, which could be dire.

Typos, corrections and/or news tips? Email us at Contact@TheMindUnleashed.com